I put together these short definitions of Kubernetes terms for my own reference. You can find detailed information at https://kubernetes.io/docs/concepts/. I hope you find them useful too.

Before I explain Kubernetes, it is useful to understand what a container is. The web is already full of such definitions, so I will try to explain it in the shortest and simplest way.

So let's start with the container. The image below is self-explanatory. Some people refer to a container as a VM, but the difference is clear in the image below.

I hope it is now clear why containers are so useful. They are faster, remove the dependency on a guest OS, and run the same way whether the target is a private datacenter, a public cloud, or a developer's personal laptop. With containers, we can deploy our application without a separate guest OS for each workload; the containers share the host's OS kernel.

Now that we know a bit about containers, let's look at what Kubernetes is.

To explain Kubernetes, let's take the classic example of a three-tier application: web, app, and db. Each tier is hosted in a different container. For example, web runs in container A, app runs in container B, and db runs in container C.

Deploying these lightweight applications involves multiple steps, and so do day-2 operations such as upgrading or scaling up. To do all of these tasks quickly, and to avoid manual work and human error, we need some kind of container orchestration.

Kubernetes is an open-source container orchestration system for automating the deployment, scaling, and management of containerized applications. It was originally designed by Google and is now maintained by the Cloud Native Computing Foundation. Kubernetes is a cluster-based solution that consists of a Kubernetes master and Kubernetes nodes (aka workers or minions).

Let's explore Kubernetes a bit more and understand its components.

Kubernetes Master Components

1. API Server: The API server is the target for all external API clients, such as the Kubernetes CLI client (kubectl). Other components, external or internal, such as the controller manager, dashboard, and scheduler, also talk to the API server.

2. K8s Scheduler: The scheduler watches for newly created Pods that have no Node assigned. For every Pod that the scheduler discovers, it becomes responsible for finding the best Node for that Pod to run on. The Kubernetes documentation linked above covers scheduling in more detail.

3. Controller Manager: The Controller Manager is a daemon that embeds the core control loops shipped with Kubernetes. A controller is a control loop that watches the shared state of the cluster through the API server and makes changes attempting to move the current state towards the desired state.

4. etcd: A consistent and highly available key-value store used as the Kubernetes backing store for all cluster data.
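To make the scheduler's role (item 2 above) a bit more concrete, here is a minimal Python sketch. It is not how the real scheduler is implemented; it assumes a single resource (CPU) and a made-up scoring rule that prefers the node with the most free CPU. The names `Node`, `Pod`, `pick_node`, and `schedule` are hypothetical and exist only for this illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu_millicores: int


@dataclass
class Pod:
    name: str
    cpu_request_millicores: int
    node_name: Optional[str] = None  # None means "no Node assigned yet"


def pick_node(pod: Pod, nodes: list[Node]) -> Optional[Node]:
    """Keep only nodes that can fit the pod, then prefer the one with most free CPU.

    The scoring rule is an assumption made for this sketch.
    """
    feasible = [n for n in nodes if n.free_cpu_millicores >= pod.cpu_request_millicores]
    if not feasible:
        return None
    return max(feasible, key=lambda n: n.free_cpu_millicores)


def schedule(pods: list[Pod], nodes: list[Node]) -> None:
    """Assign every unscheduled pod to a node and reserve its CPU there."""
    for pod in pods:
        if pod.node_name is not None:
            continue  # already has a Node, nothing to do
        node = pick_node(pod, nodes)
        if node is None:
            continue  # no node fits; the pod stays unscheduled
        pod.node_name = node.name
        node.free_cpu_millicores -= pod.cpu_request_millicores


nodes = [Node("node-a", 500), Node("node-b", 2000)]
pods = [Pod("web", 300), Pod("db", 1500), Pod("batch", 4000)]
schedule(pods, nodes)
for pod in pods:
    print(pod.name, "->", pod.node_name)
```

The point of the sketch is the two-step shape: first rule out nodes that cannot run the Pod, then choose the "best" of the remaining ones.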

Kubernetes Node Components

1. Kubelet: The primary node agent that runs on each node. It works in terms of a PodSpec. A PodSpec is a YAML or JSON object that describes a Pod.

2. Container runtime: The engine that is responsible for running containers. Kubernetes supports several container runtimes; Docker was the most widely adopted one at the time of writing.

3. Kube-proxy: Enables the Kubernetes Service abstraction by maintaining network rules on the host and performing connection forwarding. It implements east-west load balancing on the nodes using iptables.
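As a small illustration of the Pod description that the Kubelet works from (item 1 above), the Python sketch below builds a Pod manifest as a dictionary and serializes it to JSON. The Pod name `web`, the `tier` label, and the `nginx` image are assumptions chosen for this example; a real manifest would usually carry more fields.

```python
import json

# A minimal Pod manifest expressed as a Python dict.
# The name, label, and image values are illustrative only.
pod_manifest = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web", "labels": {"tier": "web"}},
    "spec": {
        "containers": [
            {
                "name": "web",
                "image": "nginx",
                "ports": [{"containerPort": 80}],
            }
        ]
    },
}

# Serialize to JSON, one of the two formats mentioned above.
manifest_json = json.dumps(pod_manifest, indent=2)
print(manifest_json)
```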

Kubernetes Namespace

Kubernetes supports multiple virtual clusters backed by the same physical cluster. These virtual clusters are called namespaces. The Kubernetes documentation linked above has more on namespaces.

Kubernetes Pod with example

A Kubernetes Pod is a group of one or more containers. Containers within a Pod share an IP address and port space and can find each other via localhost. They can also communicate with each other using standard inter-process communication mechanisms such as System V semaphores or POSIX shared memory. Containers in a Pod can also share the same data volumes.

Pods are a model of the pattern of multiple cooperating processes which form a cohesive unit of service, and they serve as the unit of deployment, horizontal scaling, and replication. Containers in a Pod are co-located (co-scheduled), share fate (e.g. termination), and are replicated together.

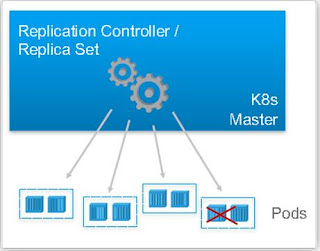

Kubernetes Controllers

A Replication Controller enforces the 'desired' state of a collection of Pods. For example, it makes sure that 4 Pods are always running in the cluster. If there are too many Pods, it will kill some. If there are too few, the Replication Controller will start more.
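The behaviour described above can be sketched as a single reconcile step in Python. This is only an illustration of the idea, not the controller's actual code; the `reconcile` function and the generated pod names are hypothetical.

```python
import itertools

# Counter used only to give newly "started" pods unique illustrative names.
_pod_ids = itertools.count(1)


def reconcile(running: list[str], desired_replicas: int) -> list[str]:
    """Return the pod list after one pass that moves it towards the desired count."""
    pods = list(running)
    while len(pods) < desired_replicas:
        pods.append(f"pod-{next(_pod_ids)}")  # too few: start more
    while len(pods) > desired_replicas:
        pods.pop()  # too many: kill some
    return pods


pods = reconcile([], 4)                              # initial creation
print(pods)
pods = reconcile(pods[:2], 4)                        # two pods died
print(pods)
pods = reconcile(pods + ["extra-1", "extra-2"], 4)   # two pods too many
print(pods)
```

A real controller runs a step like this continuously against the state it reads from the API server, which is why the system converges back to 4 Pods after any disturbance.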

A Replica Set is the next-generation Replication Controller. The only difference between a Replica Set and a Replication Controller right now is the selector support. A Replica Set supports the new set-based selector requirements, whereas a Replication Controller only supports equality-based selector requirements.
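The difference between the two selector styles is easier to see side by side. The Python sketch below is a simplified model, not Kubernetes code: `matches_equality` and `matches_set_based` are hypothetical helper names, and the expression format is modelled loosely on the `In`, `NotIn`, `Exists`, and `DoesNotExist` operators.

```python
def matches_equality(labels: dict[str, str], selector: dict[str, str]) -> bool:
    """Equality-based: every selector key must be present with exactly that value."""
    return all(labels.get(key) == value for key, value in selector.items())


def matches_set_based(labels: dict[str, str], expressions: list[dict]) -> bool:
    """Set-based: every expression must hold for the given labels."""
    for expr in expressions:
        key = expr["key"]
        operator = expr["operator"]
        values = expr.get("values", [])
        if operator == "In":
            ok = key in labels and labels[key] in values
        elif operator == "NotIn":
            ok = labels.get(key) not in values
        elif operator == "Exists":
            ok = key in labels
        elif operator == "DoesNotExist":
            ok = key not in labels
        else:
            raise ValueError(f"unknown operator: {operator}")
        if not ok:
            return False
    return True


pod_labels = {"tier": "web", "env": "staging"}

# Equality-based: tier must equal "web".
print(matches_equality(pod_labels, {"tier": "web"}))

# Set-based: env must be one of several values, and "canary" must be absent.
print(matches_set_based(pod_labels, [
    {"key": "env", "operator": "In", "values": ["staging", "production"]},
    {"key": "canary", "operator": "DoesNotExist"},
]))
```

An equality-based selector cannot express "env is staging or production" in a single selector, which is the extra expressiveness that set-based selectors add.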

A Deployment Controller provides declarative updates for Pods and Replica Sets. You describe a desired state in a Deployment object, and the Deployment controller changes the actual state to the desired state at a controlled rate. You can define Deployments to create new Replica Sets, or to remove existing Deployments and adopt all their resources with new Deployments.

Kubernetes Service

A Kubernetes Service is an abstraction which defines a logical set of Pods and a policy by which to access them (sometimes called a micro-service). The set of Pods targeted by a Service is (usually) determined by a Label Selector.


The kube-proxy watches the Kubernetes master for the addition and removal of Service and Endpoints objects. For each Service, it installs iptables rules which capture traffic to the Service’s clusterIP (which is virtual) and Port and redirects that traffic to one of the Service’s backend sets. For each Endpoints object, it installs iptables rules which select a backend Pod. By default, the choice of backend is random.
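The following Python sketch models the two ideas above: a Service selecting its Pods by label, and traffic to the Service's virtual IP and port being sent to a randomly chosen backend. It is a simplified in-memory model, not iptables. All IP addresses, the `select_backends` and `route` helpers, and the rule table are assumptions made for illustration.

```python
import random

# Illustrative pods with made-up IPs and labels.
pods = [
    {"ip": "10.1.0.4", "labels": {"tier": "web"}},
    {"ip": "10.1.0.5", "labels": {"tier": "web"}},
    {"ip": "10.1.0.9", "labels": {"tier": "db"}},
]


def select_backends(pods: list[dict], selector: dict[str, str]) -> list[str]:
    """Return the IPs of all pods whose labels satisfy the Service's label selector."""
    return [
        pod["ip"]
        for pod in pods
        if all(pod["labels"].get(key) == value for key, value in selector.items())
    ]


def route(rules: dict, cluster_ip: str, port: int, rng=random) -> str:
    """Pick a random backend for traffic sent to the Service's virtual IP and port."""
    return rng.choice(rules[(cluster_ip, port)])


# One "rule" per Service: (clusterIP, port) -> list of backend pod IPs.
backends = select_backends(pods, {"tier": "web"})
rules = {("10.96.0.10", 80): backends}

for _ in range(3):
    print("10.96.0.10:80 ->", route(rules, "10.96.0.10", 80))
```

Clients only ever see the stable virtual IP; the set of backends behind it can change as Pods come and go.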

With NSX-T and the NSX Container Plugin (NCP), we leverage the NSX Kube-Proxy, which is a daemon running on the Kubernetes Nodes. It replaces the native distributed east-west load balancer in Kubernetes (the Kube-Proxy using iptables) with Open vSwitch (OVS) load-balancing features.

Please note that it is extremely important to choose the correct versions of Ubuntu OS, Docker, Kubernetes, Open vSwitch, and NSX-T.

Reference compatibility checklist for this lab build-out:

https://tinyurl.com/y5vastd5

Kubernetes Ingress (Example)

The Kubernetes Ingress is an API object that manages external access to the services in a cluster, typically HTTP. Typically, services and pods have IPs only routable by the cluster network. All traffic that ends up at an edge router is either dropped or forwarded elsewhere. An Ingress is a collection of rules that allow inbound connections to reach the cluster services.

It can be configured to give services externally-reachable URLs, load balance traffic, terminate SSL, offer name-based virtual hosting, and more. Users request ingress by POSTing the Ingress resource to the API server. An ingress controller is responsible for fulfilling the Ingress, usually with a load balancer, though it may also configure your edge router or additional front-ends to help handle the traffic in an HA manner.
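To show what "a collection of rules" means in practice, here is a small Python sketch of host- and path-based routing. It is only a model of the idea, not an ingress controller. The hostname `shop.example.com`, the service names, and the `path_matches` and `resolve` helpers are assumptions for this example, and the longest-prefix rule is a simplification.

```python
from typing import Optional

# Illustrative rules: (host, path prefix) -> backend service name.
RULES = [
    {"host": "shop.example.com", "path": "/api", "service": "app"},
    {"host": "shop.example.com", "path": "/", "service": "web"},
]


def path_matches(request_path: str, prefix: str) -> bool:
    """Prefix match on whole path segments, so /api matches /api/x but not /apiary."""
    if prefix == "/":
        return True
    prefix = prefix.rstrip("/")
    return request_path == prefix or request_path.startswith(prefix + "/")


def resolve(host: str, path: str, rules: list[dict] = RULES) -> Optional[str]:
    """Return the service for a request, preferring the longest matching path prefix."""
    candidates = [
        rule for rule in rules
        if rule["host"] == host and path_matches(path, rule["path"])
    ]
    if not candidates:
        return None  # no rule allows this inbound connection
    return max(candidates, key=lambda rule: len(rule["path"]))["service"]


print(resolve("shop.example.com", "/api/orders"))
print(resolve("shop.example.com", "/index.html"))
print(resolve("other.example.com", "/"))
```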

The most common open-source projects that allow us to do this are NGINX and HAProxy, as shown in the image above.

In this lab, we will work with the NSX-T native layer 7 load balancer to provide this functionality, as you can see in the example image above.

Within Kubernetes there is also the external load balancer object, not to be confused with the Ingress object. When creating a service, you have the option of automatically creating a cloud network load balancer. This provides an externally accessible IP address that sends traffic to the correct port on your cluster nodes, provided your cluster runs in a supported environment and is configured with the correct cloud load balancer provider package.

Network Policies

A Kubernetes Network Policy is a specification of how groups of pods are allowed to communicate with each other and other network endpoints. NetworkPolicy resources use labels to select pods and define rules which specify what traffic is allowed to the selected pods.

Kubernetes Network Policies are implemented by the network plugin, so you must be using a networking solution which supports NetworkPolicy. Simply creating the resource without a controller to implement it will have no effect.

By default, pods are non-isolated; they accept traffic from any source. Pods become isolated by having a Kubernetes Network Policy which selects them. Once there is a Kubernetes Network Policy in a namespace selecting a particular pod, that pod will reject any connections that are not allowed by a Kubernetes Network Policy. Other pods in the namespace that are not selected by a Kubernetes Network Policy will continue to accept all traffic.
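The isolation rule above can be modelled in a few lines of Python. This sketch covers only incoming traffic inside a single namespace and matches on labels alone; real policies can also match namespaces, IP blocks, and ports. The policy structure and the `selects` and `is_allowed` helpers are hypothetical names for this illustration.

```python
def selects(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """True if every key/value in the selector is present in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def is_allowed(dest_labels: dict[str, str],
               source_labels: dict[str, str],
               policies: list[dict]) -> bool:
    """Decide whether traffic from the source pod may reach the destination pod."""
    applicable = [p for p in policies if selects(p["pod_selector"], dest_labels)]
    if not applicable:
        return True  # no policy selects the pod: it is non-isolated
    # Isolated pod: traffic must be allowed by at least one applicable policy.
    return any(
        selects(source_selector, source_labels)
        for policy in applicable
        for source_selector in policy["allow_from"]
    )


# Illustrative policy: only pods labelled tier=app may talk to tier=db pods.
policies = [
    {"pod_selector": {"tier": "db"}, "allow_from": [{"tier": "app"}]},
]

web, app, db = {"tier": "web"}, {"tier": "app"}, {"tier": "db"}

print("app -> db:", is_allowed(db, app, policies))
print("web -> db:", is_allowed(db, web, policies))
print("web -> app:", is_allowed(app, web, policies))
```

In the three-tier example from earlier, this is the kind of policy that would keep the web tier from reaching the db tier directly while leaving the app tier open.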

Thank you,

vCloudNotes