Overview

It is a three-step process to go from version 9.5 to version 10.1.2. Complete the prerequisites properly and the process should go smoothly. Check interoperability first, so that your other components can work with the vCD versions you are upgrading to. In my experience, the migration order is: deploy the primary node → transfer the DB → temporarily use self-signed certificates in place of your custom certificates → make sure your primary node is up → add more nodes if you want a multi-cell architecture → change certificates.ks on the standby nodes.

When we talk about primary and standby nodes, this applies only to the PostgreSQL database, which is active on the primary node and in standby mode on the standby nodes. The vmware-vcd service is always active-active on all three nodes (if you deploy the minimum of three nodes in a multi-cell architecture). See the image below.

The upgrade path is:

Current 9.5 in-Linux → in-place upgrade to 9.7 in-Linux → migrate to the 9.7 appliance → upgrade to the 10.1.2 appliance. You can check the vendor documentation for this upgrade path. Now that you know the workflow, let's proceed to the planning phase.

Planning

First of all, check the interoperability of your product versions using the VMware interoperability guide, and plan your upgrade accordingly. This is the most important phase, I must say: if you plan carefully, there is very little chance of failure. Let's see what you need to plan. The in-place upgrade is quite simple, with no real complexity; all of the planning is needed for the migration from the Linux install to the appliance.

1. IP Addresses

You have two choices: move the existing vCD cells to new IPs and reuse their current IPs on the new vCD cells, or give the new vCD cells new IPs. You need to decide this because you are going to deploy new cells for the migration to the appliance, as described in the next steps. I reused the existing cells' IPs. If you reuse the same IP addresses:

1. At the time of the 9.7 appliance deployment, change the old cell's IP address to a temporary but reachable IP address. This temporary address must be reachable from your new cell as well as from your existing external DB server, because:

1.1. The old cell's IP address will be assigned to the new vCD cell's eth0 NIC.

1.2. We still need one of the old vCD cells for the DB migration, so it must be reachable from the new cell and from your external DB server.

2. Free up the IPs of all three old cells so that the same three IPs can be assigned to the eth0 NICs of the three new 9.7 appliance nodes.

3. Update the DNS entries of your old cells with their new temporary IPs, and then create DNS records (host and PTR) for the new cells with the old IP addresses. If anything is unclear, please ask in the comments.

4. Create a separate VLAN for the eth1 IPs of all three new vCD cells, if you don't already have one.

2. Network Route

This is a crucial part of the migration. The old vCD cells used three different NICs for different traffic types, such as HTTPS, VMRC, and NFS, but each vCD 9.7 appliance cell has only two NICs carrying these services. Make sure that the two NICs are on different VLANs/subnets. Also make sure that the new vCD cells' eth1 can reach your NFS server; if required, configure static routes according to your network flow.
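To confirm which NIC a new cell would use to reach the NFS server, you can ask the kernel routing table with `ip route get`. The bash sketch below assumes iproute2 is available on the appliance; `route_dev` and `nfs_uses_eth1` are hypothetical helper names, not VMware tools:

```shell
#!/usr/bin/env bash
# Sketch: report the interface the kernel would use to reach a destination IP.
# route_dev and nfs_uses_eth1 are hypothetical helper names.

route_dev() {
  # "ip route get" prints e.g. "<dst> via <gw> dev eth1 src <ip> ..."; print the word after "dev".
  ip route get "$1" | awk '{ for (i = 1; i < NF; i++) if ($i == "dev") { print $(i + 1); exit } }'
}

nfs_uses_eth1() {
  # Succeeds only when traffic to the given NFS server IP would leave through eth1.
  [ "$(route_dev "$1")" = "eth1" ]
}

# Example (replace with your NFS server IP):
# nfs_uses_eth1 192.0.2.50 || echo "add a static route for the NFS subnet via eth1"
```

If it reports eth0, add a static route for the NFS subnet through eth1, for example in the eth1 Network Routes field during deployment.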

3. Single cell or Multi cell Architecture

Decide on this point and plan your upgrade accordingly. Additional points to take care of:

- Check your load-balancer configuration. It might need to be modified after the vCD 9.7 deployment.

- Check whether you have more than one LB balancing different traffic, for example Internet and intranet. All of them may need changes.

- Deploy additional nodes at the very last stage, that is, after certificate replacement and after the first node is fully up.

4. NFS

NFS is another critical part of this migration. Some people have NFS on a Linux machine, some on Windows, and some directly on a storage box.

When you deploy the first primary node of the 9.7 appliance, the NFS mount point must be empty; otherwise the 9.7 deployment will fail. Your NFS server must also be reachable from the eth1 NIC of all vCD nodes in the 9.7 appliance deployment.
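One way to confirm the share is clean before the first deployment is to mount it on any Linux host and check that it is empty and writable; a write failure here is the same kind of problem as the "Permission denied" error described under Issue 1 later in this post. A minimal bash sketch; `check_transfer_share` and the mount point are hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: verify that a mounted NFS transfer share is empty and writable
# before deploying the first 9.7 appliance. check_transfer_share is a hypothetical name.

check_transfer_share() {
  local dir="$1" probe
  if [ ! -d "$dir" ]; then
    echo "not a directory: $dir" >&2
    return 1
  fi
  # ls -A lists everything except . and .., so any output means the share is not empty.
  if [ -n "$(ls -A "$dir")" ]; then
    echo "share is not empty: $dir" >&2
    return 1
  fi
  # Create and delete a probe file to test write permission (root squash can block this).
  probe="$dir/.write-test.$$"
  if ! touch "$probe" 2>/dev/null; then
    echo "share is not writable: $dir" >&2
    return 1
  fi
  rm -f "$probe"
  echo "share is empty and writable: $dir"
}

# Example on a Linux test host (mount point is an assumption):
# mount -t nfs IPaddress:/sharename /mnt/vcd-transfer
# check_transfer_share /mnt/vcd-transfer
```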

I hope I have given you enough clues to plan this migration. Now let's go through all the steps one by one.

In-place upgrade from 9.5 to 9.7

Prerequisites: Make sure that the upgrade .bin file is uploaded to a directory on the vCD cell(s) and that the user has sufficient permissions on it.

- Run an md5sum check. Command is

# md5sum installation-file.bin
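To avoid comparing hashes by eye, the check can be scripted against the checksum listed on the download page. A minimal bash sketch; `verify_md5` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Sketch: compare the MD5 checksum of the installer with the published value.
# verify_md5 is a hypothetical helper name.

verify_md5() {
  local file="$1" expected actual
  # Normalise the published checksum to lowercase, as md5sum prints lowercase hex.
  expected=$(printf '%s' "$2" | tr 'A-F' 'a-f')
  # md5sum prints "<hash>  <file>"; keep only the hash field.
  actual=$(md5sum "$file" | awk '{print $1}')
  if [ -n "$actual" ] && [ "$actual" = "$expected" ]; then
    echo "MD5 OK: $file"
  else
    echo "MD5 MISMATCH: $file (got ${actual:-nothing}, expected $expected)" >&2
    return 1
  fi
}

# Example: verify_md5 installation-file.bin <checksum-from-download-page>
```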

Main activity

Run all of the commands below on every vCD cell, one cell at a time.

Step 1: Stop vCD services on all cells using ./cell-management-tool

To see the current status

./cell-management-tool -u administrator -p 'password' cell --status

To stop the cell from accepting new tasks

./cell-management-tool -u administrator -p 'password' cell --quiesce true

To put it in maintenance mode

./cell-management-tool -u administrator -p 'password' cell --maintenance true

Finally, to shut down the vCD services

./cell-management-tool -u administrator -p 'password' cell -s
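If you have several cells, the same Step 1 sequence can be wrapped in a small function and run on each cell in turn. A bash sketch that only chains the commands shown above; `stop_cell` and the `CMT` variable are hypothetical, and the default path assumes the standard install location:

```shell
#!/usr/bin/env bash
# Sketch: run the Step 1 stop sequence against one vCD cell.
# CMT defaults to the usual install path; override it if your layout differs.
CMT="${CMT:-/opt/vmware/vcloud-director/bin/cell-management-tool}"

stop_cell() {
  local user="$1" pass="$2"
  # Show the current status (informational only).
  "$CMT" -u "$user" -p "$pass" cell --status
  # Stop the cell from accepting new tasks, then put it in maintenance mode.
  "$CMT" -u "$user" -p "$pass" cell --quiesce true || return 1
  "$CMT" -u "$user" -p "$pass" cell --maintenance true || return 1
  # Finally shut down the vCD services.
  "$CMT" -u "$user" -p "$pass" cell -s
}

# Example: stop_cell administrator 'password'
```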

Step 2: Take a snapshot of all vCD cells

Step 3: Take a full backup of the external MS SQL database

Step 4: Make the downloaded .bin file executable. Command is

#chmod u+x installation-file.bin

Step 5: Run the upgrade file

#./installation-file.bin

If you placed the .bin file in the /tmp folder (or any other folder), change to that location in the CLI first and then run the command.

It is a simple step with no real complexity.

Best Practices for the Migration

1. Segregate the traffic as below. This is how I did it, so I am sharing it as my personal recommendation; you can choose a different split as well.

- Primary cell: eth0 = HTTPS + VMRC + API; eth1 = PostgreSQL DB + NFS

- Standby cell: eth0 = HTTPS + VMRC + API; eth1 = PostgreSQL DB + NFS

- Standby cell: eth0 = HTTPS + VMRC + API; eth1 = PostgreSQL DB + NFS

2. If primary is deployed as "Primary-Large" then all standby cells must be deployed as "Standby-Large". If primary is deployed as "Primary-small" then all standby cells must be deployed as "Standby-small".

3. In a multi-cell architecture, deploy the standby cells at the very last stage, that is, after the first cell is up and fully functional and after the certificates are replaced. This keeps things simple.

4. Both NICs of the vCD 9.7 appliance cells must be on different VLANs.

Migrate from 9.7 In-Linux to the 9.7 Appliance

Prerequisites

1. A clean and accessible NFS share. It must be accessible from the eth1 NIC if you plan to carry NFS traffic on eth1, as I did.

2. Accurate DNS entries for the new vCD cells with the old IPs (if you don't plan to change the existing IPs).

3. If you have custom certificates, keep the keystore, HTTPS, and console proxy passwords at hand.

4. Make sure that network flows are open between the new vCD cell's eth1 NIC and the old cell. Follow the vCD 9.7 Install guide listed at the end of this post.

5. Configure AMQP (RabbitMQ) version 3.7.

6. Refer to the vendor documentation for all prerequisites.

7. Your production downtime starts when you change the production IP of the old vCD cell at this point, because that IP will be assigned to the new primary cell.

Start the vCloud Director Appliance Deployment : Primary Node

1. Start deploying the OVA as usual. A wizard opens: give the vCD cell a valid name → choose the folder location → a compatible datastore → the underlying ESXi host → select the eth1 and eth0 port groups → click Next to reach the Customize template page.

Under Customize template, fill in the following:

NTP Server to use: 8.8.8.8 (replace with your own NTP server; 8.8.8.8 is Google's public DNS resolver, not an NTP server)

Initial Root Password: VMware1!

Expire Root Password Upon First Login: uncheck

Enable SSH root login: check

NFS mount for transfer file location: IPaddress:/sharename

'vcloud' DB password for the 'vcloud' user: VMware1!

Admin User Name: administrator

Admin Full Name: vCD Admin

Admin user password: VMware1!

Admin email: vcd97@vcnotes.in

System name: vcd4

Installation ID: 12

eth0 Network Routes: blank

eth1 Network Routes: blank

Default Gateway: 172.17.2.1

Domain Name: vcnotes.in

Domain Name Servers: 8.8.8.8,8.8.4.4

eth0 Network IP Address: 172.17.2.21

eth0 Network Netmask: 255.255.255.224

eth1 Network IP Address: 172.17.2.22

eth1 Network Netmask: 255.255.255.240

Modify the values above to match your environment. A few points that may raise doubts:

System Name - For the first primary node, you can use vcd1.

Installation ID - In a brownfield setup, note the installation ID of the running setup and use the same value here.

eth0 and eth1 Network Routes - Use these fields to add static routes according to your network design.
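Since both NICs must sit on different subnets, it is worth checking the eth0 and eth1 address/netmask pairs before clicking Finish. A minimal bash sketch; `ip_to_int` and `subnets_overlap` are hypothetical helpers. With the sample values in the template above (172.17.2.21/255.255.255.224 on eth0 and 172.17.2.22/255.255.255.240 on eth1), this check reports an overlap, because 172.17.2.16/28 lies inside 172.17.2.0/27, so make sure your own values do not overlap:

```shell
#!/usr/bin/env bash
# Sketch: detect whether two IPv4 address/netmask pairs overlap.
# ip_to_int and subnets_overlap are hypothetical helper names.

ip_to_int() {
  # Convert a dotted quad such as 172.17.2.21 into a 32-bit integer.
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  echo "$(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))"
}

subnets_overlap() {
  # Usage: subnets_overlap IP1 MASK1 IP2 MASK2
  # Two networks overlap when they agree on the bits covered by the shorter (common) mask.
  local ip1 m1 ip2 m2 common
  ip1=$(ip_to_int "$1"); m1=$(ip_to_int "$2")
  ip2=$(ip_to_int "$3"); m2=$(ip_to_int "$4")
  common=$(( m1 & m2 ))
  [ $(( ip1 & common )) -eq $(( ip2 & common )) ]
}

# Example:
# subnets_overlap 172.17.2.21 255.255.255.224 172.17.2.22 255.255.255.240 && echo "eth0 and eth1 overlap"
```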

Once all the information is entered, review it and click Finish.

It is worth checking the vendor's documentation for this step; refer to page 54.

Note that:

1. You do not need to change the installation ID on each standby cell. The installation ID is the ID that vCD uses to generate unique MAC addresses for vCD VMs. I have seen a few blogs advising to change it for standby nodes; this is totally incorrect.

2. The domain name and domain search path should be just the domain, vcnotes.in in this example, not a host name such as vcdcell01.vcnotes.in. The FQDN is generated automatically from the VM name you entered at the start of the deployment.

Post-checks:

1. Once the OVA deployment is finished, access the cell over SSH. If SSH is not responding, open the VM console and start the sshd service (service sshd start). Check the logs below to make sure everything is good.

#cat /opt/vmware/var/log/firstboot

#cat /opt/vmware/var/log/vcd/setupvcd.log

#cat /opt/vmware/var/log/vami/vami-ovf.log

If everything went well during deployment, the firstboot log shows a success message; otherwise it refers you to setupvcd.log and then vami-ovf.log.

2. Browse to the VAMI interface at https://IP_FQDN_of_primary_cell:5480. It should look like the image below.

3. Browse to https://IP_FQDN_of_primary_cell/cloud and https://IP_FQDN_of_primary_cell/provider. Both portals should be accessible without any errors.
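The three portal checks can also be done from any Linux host with curl. A bash sketch; `check_portals` is a hypothetical helper, and `-k` skips certificate validation because the cell still uses self-signed certificates at this stage:

```shell
#!/usr/bin/env bash
# Sketch: probe the VAMI, tenant, and provider portals of a cell and report HTTP status codes.
# check_portals is a hypothetical helper name.

check_portals() {
  local host="$1" url code rc=0
  for url in "https://${host}:5480" "https://${host}/cloud" "https://${host}/provider"; do
    # -w prints only the status code; on a connection failure curl prints 000.
    code=$(curl -k -s -o /dev/null -w '%{http_code}' --max-time 10 "$url")
    echo "$url -> HTTP $code"
    case "$code" in
      2??|3??) ;;   # success, or a redirect such as a login page
      *) rc=1 ;;
    esac
  done
  return "$rc"
}

# Example: check_portals IP_FQDN_of_primary_cell
```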

Start the vCloud Director Appliance Deployment : Standby Node

Not Now :)

Once your primary cell is deployed, do not deploy a standby node right away. Now it's time to transfer the DB.

Take a backup of the internal embedded PostgreSQL database:

#/opt/vmware/appliance/bin/create-db-backup

Configure External Access to the vCloud Director Database

1. Stop vCD services on all cells, including the new primary and the three old cells.

2. SSH to the new vCD cell and create a file named external.txt in /opt/vmware/appliance/etc/pg_hba.d with the command below:

#vi /opt/vmware/appliance/etc/pg_hba.d/external.txt

Add the following content to external.txt:

#TYPE DATABASE USER ADDRESS METHOD

host vcloud vcloud 172.25.2.194/32 md5

host vcloud vcloud 172.25.2.209/32 md5

Note: 172.25.2.194/32 is the IP address of your old external DB server with its CIDR suffix, and 172.25.2.209 is the IP address of the eth1 NIC of the old vCD cell. Refer to pages 82 and 83 of the vCD 9.7 Install guide listed at the end of this post.

You can verify that the file was picked up by checking pg_hba.conf: run cat pg_hba.conf and it should show the entries you just added.
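If you have several client IPs to allow, the external.txt lines can be generated rather than typed, which also avoids duplicate entries. A bash sketch; `make_external_pg_hba` is a hypothetical helper, and it simply emits one `host vcloud vcloud <ip>/32 md5` line per unique IP in the same format as above:

```shell
#!/usr/bin/env bash
# Sketch: print pg_hba entries for external.txt, one /32 "md5" line per unique client IP.
# make_external_pg_hba is a hypothetical helper name.

make_external_pg_hba() {
  local ip
  printf '#TYPE DATABASE USER ADDRESS METHOD\n'
  # sort -u drops duplicate IPs so each client appears only once.
  printf '%s\n' "$@" | sort -u | while read -r ip; do
    if [ -n "$ip" ]; then
      printf 'host vcloud vcloud %s/32 md5\n' "$ip"
    fi
  done
}

# Example: old external DB server and eth1 of the old vCD cell
# make_external_pg_hba 172.25.2.194 172.25.2.209 > /opt/vmware/appliance/etc/pg_hba.d/external.txt
```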

3. SSH to the old vCD cell and run the command below. Refer to page 122 of the vCD 9.7 Install guide.

/opt/vmware/vcloud-director/bin/cell-management-tool dbmigrate -dbhost eth1_IP_new_primary \
-dbport 5432 -dbuser vcloud -dbname vcloud -dbpassword database_password_new_primary

What you need to modify in the command above:

eth1_IP_new_primary - It is eth1 IP address of new primary vcd appliance cell

database_password_new_primary - it is the database password given while deploying the primary vcd node

-dbname - It should be vcloud only if you haven't changed intentionally.

\ - Many people get confused by the trailing \ in the VMware documentation. It is a line-continuation character, meaning the command continues on the next line. You can keep it (with the next line following directly) or type the whole command on a single line.

The rest of the command can be used as it is. If all goes well, it transfers your external SQL DB to the embedded PostgreSQL database.
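Before running dbmigrate, you can confirm from the old cell that the new primary's PostgreSQL port is reachable. A bash sketch; `port_open` is a hypothetical helper that relies on bash's `/dev/tcp` redirection and the coreutils `timeout` command:

```shell
#!/usr/bin/env bash
# Sketch: test whether a TCP port on a remote host accepts connections.
# port_open is a hypothetical helper name.

port_open() {
  local host="$1" port="$2"
  # Opening /dev/tcp/HOST/PORT makes bash attempt a TCP connection; give up after 5 seconds.
  # Host and port are passed as positional arguments to avoid quoting problems.
  timeout 5 bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null
}

# Example: port_open eth1_IP_new_primary 5432 && echo "PostgreSQL port reachable"
```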

Transfer Certificates from the Old Cell and Integrate Them into the New Primary Cell

1. On the migration source (the old vCD cell), copy all of the following files to the new vCD cell. Do not edit any entries in these files during this process. Use WinSCP to move the files between the two machines. Before pasting certificates.ks onto the new vCD cell, rename it to certificates.ks.migrated to avoid any confusion.

/opt/vmware/vcloud-director/certificates.ks

/opt/vmware/vcloud-director/etc/certificates

/opt/vmware/vcloud-director/etc/global.properties

/opt/vmware/vcloud-director/etc/proxycertificates

/opt/vmware/vcloud-director/etc/responses.properties

/opt/vmware/vcloud-director/etc/truststore

2. Create a new directory on the new vCD cell and paste the files above into it:

mkdir /root/tempCerts

3. Change the ownership of the files above to the vcloud user:

chown vcloud:vcloud /root/tempCerts/*

4. On the new appliance, rename all existing files to keep them for reference:

cd /opt/vmware/vcloud-director/etc/

mv certificates certificates.original

mv global.properties global.properties.original

mv proxycertificates proxycertificates.original

mv responses.properties responses.properties.original

mv truststore truststore.original

I didn't rename /opt/vmware/vcloud-director/certificates.ks here, because the old cell's copy was already renamed to certificates.ks.migrated in step 1, so the two do not clash.

5. On the new appliance, move the files from /root/tempCerts to their respective directories:

mv /root/tempCerts/certificates.ks.migrated /opt/vmware/vcloud-director/

mv /root/tempCerts/certificates /opt/vmware/vcloud-director/etc/

mv /root/tempCerts/global.properties /opt/vmware/vcloud-director/etc/

mv /root/tempCerts/proxycertificates /opt/vmware/vcloud-director/etc/

mv /root/tempCerts/responses.properties /opt/vmware/vcloud-director/etc/

mv /root/tempCerts/truststore /opt/vmware/vcloud-director/etc/
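Steps 4 and 5 can be combined into one loop so that no file is missed. A bash sketch; `install_migrated_certs` and the `VCD_HOME` / `TEMP_CERTS` variables are hypothetical, with defaults matching the paths used above:

```shell
#!/usr/bin/env bash
# Sketch: keep the appliance's own files as *.original, then move the migrated files into place.
# install_migrated_certs, VCD_HOME, and TEMP_CERTS are hypothetical names.
VCD_HOME="${VCD_HOME:-/opt/vmware/vcloud-director}"
TEMP_CERTS="${TEMP_CERTS:-/root/tempCerts}"

install_migrated_certs() {
  local f
  for f in certificates global.properties proxycertificates responses.properties truststore; do
    # Step 4: rename the existing file on the appliance, if present.
    if [ -e "$VCD_HOME/etc/$f" ]; then
      mv "$VCD_HOME/etc/$f" "$VCD_HOME/etc/$f.original" || return 1
    fi
    # Step 5: move the migrated copy into etc/.
    mv "$TEMP_CERTS/$f" "$VCD_HOME/etc/" || return 1
  done
  # The renamed keystore goes to the vcloud-director root, not etc/.
  mv "$TEMP_CERTS/certificates.ks.migrated" "$VCD_HOME/" || return 1
}
```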

At this point you have transferred all the certificates from the old cell to the new cell. Now run the configure command so that the new vCD primary appliance can use these certificates.

6. Run the configure command below.

Before you do, note that /opt/vmware/vcloud-director/certificates.ks (the custom certificate copied from the old cell) is not in use yet, because we renamed it to certificates.ks.migrated. We do all the initial configuration with self-signed certificates and switch to the custom certificate in the last step.

/opt/vmware/vcloud-director/bin/configure --unattended-installation --database-type postgres --database-user vcloud --database-password db_password_new_primary --database-host eth1_ip_new_primary --database-port 5432 --database-name vcloud --database-ssl true --uuid --keystore /opt/vmware/vcloud-director/etc/certificates.ks --keystore-password root_password_new_primary --primary-ip appliance_eth0_ip --console-proxy-ip appliance_eth0_ip --console-proxy-port-https 8443

I hope the command is self-explanatory; if not, ask in the comments.

Once the command succeeds, follow the next step.
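Because the same long configure command is run several times (including with --database-ssl false in the troubleshooting section below), a small wrapper can reduce typing errors. A bash sketch; `run_configure`, `DRY_RUN`, and the argument order are hypothetical, while the flags are copied unchanged from the command above:

```shell
#!/usr/bin/env bash
# Sketch: build the configure command from a few values; set DRY_RUN=1 to print instead of run.
# run_configure and DRY_RUN are hypothetical names.

run_configure() {
  local db_pass="$1" db_host="$2" keystore_pass="$3" eth0_ip="$4" db_ssl="${5:-true}"
  local -a cmd=(
    /opt/vmware/vcloud-director/bin/configure --unattended-installation
    --database-type postgres --database-user vcloud
    --database-password "$db_pass" --database-host "$db_host"
    --database-port 5432 --database-name vcloud --database-ssl "$db_ssl" --uuid
    --keystore /opt/vmware/vcloud-director/etc/certificates.ks
    --keystore-password "$keystore_pass"
    --primary-ip "$eth0_ip" --console-proxy-ip "$eth0_ip" --console-proxy-port-https 8443
  )
  if [ "${DRY_RUN:-0}" = 1 ]; then
    # Print the shell-quoted command instead of running it.
    printf '%q ' "${cmd[@]}"
    echo
  else
    "${cmd[@]}"
  fi
}

# Example: DRY_RUN=1 run_configure 'db_password_new_primary' eth1_ip_new_primary 'root_password_new_primary' appliance_eth0_ip
```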

7. Start the vCD services on the new primary cell

SSH to the primary cell and start the vCD services:

#service vmware-vcd start or #systemctl start vmware-vcd.service

You can monitor the progress of the cell startup at /opt/vmware/vcloud-director/logs/cell.log.
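Instead of watching cell.log by hand, you can poll it for the startup-complete message. A bash sketch; `wait_for_cell` is hypothetical, and the message text 'Application Initialization: Complete' is an assumption that you should verify in your own cell.log. A completion line from an earlier start would also match, so check its timestamp:

```shell
#!/usr/bin/env bash
# Sketch: poll cell.log until the startup-complete message appears or a timeout expires.
# wait_for_cell is hypothetical; the message text is an assumption.

wait_for_cell() {
  local log="${1:-/opt/vmware/vcloud-director/logs/cell.log}" limit="${2:-600}" waited=0
  until grep -q 'Application Initialization: Complete' "$log" 2>/dev/null; do
    if [ "$waited" -ge "$limit" ]; then
      echo "cell did not report startup completion within ${limit}s" >&2
      return 1
    fi
    sleep 5
    waited=$((waited + 5))
  done
  echo "cell startup complete"
}

# Example: wait_for_cell /opt/vmware/vcloud-director/logs/cell.log 900
```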

Update the certificates.ks file

1. Rename the original certificates.ks file. You are renaming the self-signed certificate now.

mv /opt/vmware/vcloud-director/certificates.ks /opt/vmware/vcloud-director/certificates.ks.original

2. Rename the migrated certificates.ks file. You are now putting the custom certificate in place for production use.

mv /opt/vmware/vcloud-director/certificates.ks.migrated /opt/vmware/vcloud-director/certificates.ks

3. Shut down the vCloud Director management cell.

/opt/vmware/vcloud-director/bin/cell-management-tool -u administrator -p 'Password' cell --quiesce true

/opt/vmware/vcloud-director/bin/cell-management-tool -u administrator -p 'Password' cell --maintenance true

/opt/vmware/vcloud-director/bin/cell-management-tool -u administrator -p 'Password' cell -s

4. Change the ownership of the certificates.ks file

chown vcloud:vcloud /opt/vmware/vcloud-director/certificates.ks
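Before running configure, you can confirm that the migrated keystore contains the two aliases vCD expects, http and consoleproxy. A bash sketch; `check_keystore_aliases` is hypothetical, the keytool path under the bundled JRE and the JCEKS store type are assumptions based on VMware's certificate documentation for this version, so adjust them if your keystore differs:

```shell
#!/usr/bin/env bash
# Sketch: confirm the keystore has the "http" and "consoleproxy" aliases.
# KEYTOOL path and JCEKS store type are assumptions; check_keystore_aliases is hypothetical.
KEYTOOL="${KEYTOOL:-/opt/vmware/vcloud-director/jre/bin/keytool}"

check_keystore_aliases() {
  local keystore="$1" storepass="$2" name missing=0
  for name in http consoleproxy; do
    # keytool -list -alias exits non-zero when the alias is not in the keystore.
    if "$KEYTOOL" -list -keystore "$keystore" -storetype JCEKS -storepass "$storepass" -alias "$name" >/dev/null 2>&1; then
      echo "found alias: $name"
    else
      echo "missing alias: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example: check_keystore_aliases /opt/vmware/vcloud-director/certificates.ks 'keystore_password'
```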

5. Run the configuration tool to import the new certificate.

/opt/vmware/vcloud-director/bin/configure

If asked “Please enter the path to the Java keystore containing your SSL certificates and private keys:”, enter the location you uploaded the file to; in our case, /opt/vmware/vcloud-director/certificates.ks. It then asks for the HTTPS, console proxy, and keystore passwords. Supply all of them.

Press Y at each prompt, and you are done!

You do not need to start the vCD service manually now; it starts automatically.

Check the /cloud, /provider, and :5480 portals and make sure they are accessible from both the intranet and the Internet.

Some Useful Commands for HA Cluster Operations

These are read-only show commands that you can run to get deeper insight into the vCD HA cluster status.

sudo -i -u postgres /opt/vmware/vpostgres/current/bin/repmgr -f /opt/vmware/vpostgres/current/etc/repmgr.conf node status

sudo -i -u postgres /opt/vmware/vpostgres/current/bin/repmgr -f /opt/vmware/vpostgres/current/etc/repmgr.conf cluster show

sudo -i -u postgres /opt/vmware/vpostgres/current/bin/repmgr -f /opt/vmware/vpostgres/current/etc/repmgr.conf cluster matrix

sudo -i -u postgres /opt/vmware/vpostgres/current/bin/repmgr -f /opt/vmware/vpostgres/current/etc/repmgr.conf cluster crosscheck

systemctl status appliance-sync.timer #Checks the appliance-sync timer, which periodically syncs node information between the nodes; run it on each node separately
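The four repmgr checks above can be run in one go. A bash sketch that loops over exactly those commands; `ha_status` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Sketch: run the read-only repmgr checks above, one after another, on an appliance node.
# ha_status is a hypothetical helper name; paths are copied from the commands above.
REPMGR=/opt/vmware/vpostgres/current/bin/repmgr
REPMGR_CONF=/opt/vmware/vpostgres/current/etc/repmgr.conf

ha_status() {
  local check
  for check in "node status" "cluster show" "cluster matrix" "cluster crosscheck"; do
    echo "=== repmgr $check ==="
    # $check is deliberately unquoted so that "cluster show" becomes two arguments.
    sudo -i -u postgres "$REPMGR" -f "$REPMGR_CONF" $check
  done
}
```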

Start the vCloud Director Appliance Deployment : Standby Node1

1. The process to deploy a standby node is the same, except that:

- You only provide the information that applies to a standby node at deployment time

- You only need to transfer the certificates.ks file to its default location; no other certificate replacement is required on the standby node

Start the vCloud Director Appliance Deployment : Standby Node2

Same as above; no changes.

If everything goes well, the /cloud or /provider interface shows all three nodes with a green tick icon.

All nodes are now deployed in vCD. A multi-cell deployment needs one or two additional steps here.

Load-Balancer Configuration: Check your existing load-balancer configurations and modify them if required. If your load balancer was already configured with the in-use IP addresses, you just need to change the console proxy port from 443 to 8443 (the port set with --console-proxy-port-https in the configure command). In my case, the LB was configured in NSX for internal traffic, and an F5 handled Internet traffic.

I would like to share some issues that I encountered during the migration.

Known Errors during the Deployment and Migration

Here is where I got stuck:

Issue 1: After deploying the first node, I got the error below on the VAMI interface

The deployment of the primary vCloud Director appliance fails because of insufficient access permissions to the NFS share. The appliance management user interface displays the message: No nodes found in cluster, this likely means PostgreSQL is not running on this node. The /opt/vmware/var/log/vcd/appliance-sync.log file contains an error message: creating appliance-nodes directory in the transfer share /usr/bin/mkdir: cannot create directory ‘/opt/vmware/vcloud-director/data/transfer/appliance-nodes’: Permission denied.

Solution: It means that the NFS share was not clean, so the PostgreSQL service could not run. The firstboot and setupvcd.log files mentioned above will give you an idea. Delete all content of the NFS share, delete the existing node, and retry the deployment. There is no other fix.

Issue 2: sun.security.validator.ValidatorException: PKIX path validation failed: java.security.cert.CertPathValidatorException: signature check failed.

These entries were in cell.log, and the portal was not up.

Solution: Edit the global.properties file on the new primary cell, comment out (#) the three lines associated with the SSL connection, and run the configure command:

/opt/vmware/vcloud-director/bin/configure --unattended-installation --database-type postgres --database-user vcloud --database-password db_password_new_primary --database-host eth1_ip_new_primary --database-port 5432 --database-name vcloud --database-ssl true --uuid --keystore /opt/vmware/vcloud-director/etc/certificates.ks --keystore-password root_password_new_primary --primary-ip appliance_eth0_ip --console-proxy-ip appliance_eth0_ip --console-proxy-port-https 8443

If that doesn't work, run the command below (with --database-ssl false):

/opt/vmware/vcloud-director/bin/configure --unattended-installation --database-type postgres --database-user vcloud --database-password db_password_new_primary --database-host eth1_ip_new_primary --database-port 5432 --database-name vcloud --database-ssl false --uuid --keystore /opt/vmware/vcloud-director/etc/certificates.ks --keystore-password root_password_new_primary --primary-ip appliance_eth0_ip --console-proxy-ip appliance_eth0_ip --console-proxy-port-https 8443

This worked for me twice.

Then run the command below again (with --database-ssl true):

/opt/vmware/vcloud-director/bin/configure --unattended-installation --database-type postgres --database-user vcloud --database-password db_password_new_primary --database-host eth1_ip_new_primary --database-port 5432 --database-name vcloud --database-ssl true --uuid --keystore /opt/vmware/vcloud-director/etc/certificates.ks --keystore-password root_password_new_primary --primary-ip appliance_eth0_ip --console-proxy-ip appliance_eth0_ip --console-proxy-port-https 8443

Issue 3: The DB transfer was failing. I couldn't capture the error, but it referred to the old cell's IP address.

Solution: When you prepare the /opt/vmware/appliance/etc/pg_hba.d/external.txt file, besides the IP address of the external DB, you also need to add the IP address of your old cell, as mentioned in the steps above. In my case, I had to add the IP address of the eth1 NIC of the old cell.

Issue 4: The vCD portal was up from the Internet and intranet, but VM consoles were not accessible from the Internet.

Solution: In a multi-cell deployment with more than one LB, make sure you change the new cells' IP addresses in every LB configuration. In my case, the change to the Internet-facing LB configuration was missed; once we corrected it, the issue was resolved.

Upgrade from version 9.7 appliance to Cloud Director 10.1.2 appliance

Prerequisites

Take a snapshot of the primary vCloud Director appliance.

Log in to the vCenter Server instance on which resides the primary vCloud Director appliance of your database high availability cluster.

Navigate to the primary vCloud Director appliance, right-click it, and click Power > Shut Down Guest OS.

Right-click the appliance and click Snapshots > Take Snapshot. Enter a name and, optionally, a description for the snapshot, and click OK.

Right-click the vCloud Director appliance and click Power > Power On.

Verify that all nodes in your database high availability configuration are in a good state. See Check the Status of a Database High Availability Cluster.

Procedure

- In a Web browser, log in to the appliance management user interface of a vCloud Director appliance instance to identify the primary appliance, https://appliance_ip_address:5480.

- Make a note of the primary appliance name. You must upgrade the primary appliance before the standby and application cells, and you must use the primary appliance when backing up the database.

vCloud Director is distributed as an executable file with a name of the form VMware_vCloud_Director_v.v.v.v- nnnnnnnn_update. tar.gz, where v. v. v. v represents the product version and nnnnnnnn the build number. For example, VMware_vCloud_Director_10.0.0.4424-14420378_update.tar.gz. - Create the local-update-package directory in which to extract the update package.

#mkdir /tmp/local-update-package - Extract the update package in the newly created directory.

#cd /tmp

#tar -vzxf VMware_vCloud_Director_v.v.v.v-nnnnnnnn_update.tar.gz -C /tmp/local-update-package - Set the local-update-package directory as the update repository.

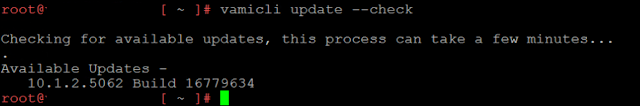

#vamicli update --repo file:///tmp/local-update-package - Check for updates to verify that you established correctly the repository.

#vamicli update --check

You will see similar output, if all went well - Shut down vCloud Director by running the following command

#/opt/vmware/vcloud-director/bin/cell-management-tool -u <admin username> cell --shutdown

OR

#Service vmware-vcd stop

You can use either way to stop vcd services - Apply the available upgrade

#vamicli update --install latest

Note: Follow all above steps on all cells one by one and restart each cell too after upgrading the application. Now login on Primary Cell only and upgrade the database schema - From the primary appliance, back up the vCloud Director appliance embedded database.

#/opt/vmware/appliance/bin/create-db-backup - From any appliance, run the vCloud Director database "upgrade" utility.

#/opt/vmware/vcloud-director/bin/upgrade - Reboot each vCloud Director appliance

#shutdown -r now
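The repository preparation steps above (create the directory, extract, point vamicli at it, check) can be wrapped so they are identical on every cell. A bash sketch; `prepare_update_repo` and `REPO_DIR` are hypothetical, and it deliberately stops before stopping vCD and installing:

```shell
#!/usr/bin/env bash
# Sketch: stage a vCD update package as a local vamicli repository and check it.
# prepare_update_repo and REPO_DIR are hypothetical names; REPO_DIR mirrors the directory used above.
REPO_DIR="${REPO_DIR:-/tmp/local-update-package}"

prepare_update_repo() {
  local tarball="$1"
  if [ ! -f "$tarball" ]; then
    echo "update package not found: $tarball" >&2
    return 1
  fi
  mkdir -p "$REPO_DIR" || return 1
  # Extract the update package into the repository directory.
  tar -xzf "$tarball" -C "$REPO_DIR" || return 1
  # Point vamicli at the local directory and confirm it sees the update.
  vamicli update --repo "file://$REPO_DIR" || return 1
  vamicli update --check
}

# Example: prepare_update_repo /tmp/VMware_vCloud_Director_v.v.v.v-nnnnnnnn_update.tar.gz
# Then stop vCD on this cell and run: vamicli update --install latest
```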

Here is what is not covered in the vendor article:

1. After the application upgrade, when you log in to the HTML interface of vCD 10.1.2, you might notice that vCenter shows as disconnected and does not connect even after using the Reconnect and Refresh options. In that case, follow the steps below:

- Log in to the primary cell as root

- Run the command below to accept the certificate (the issue is with the certificate exchange in the new vCD version 10.1.2; the certificate needs to be accepted)

#/opt/vmware/vcloud-director/bin/cell-management-tool trust-infra-certs --vsphere --unattended

For more info, refer to https://kb.vmware.com/s/article/78885

2. After upgrading the vCD application, the PostgreSQL service might stop, and while upgrading the database schema you may see the error "unable to establish initial connection with database". To resolve this, either start the service manually or reboot the cell once.

That's all :) Hope it was helpful.

VMware References

1. vCD 9.7 Install and Upgrade guide

2. The guide above has all the details, but in case you need something specific, here is the certificate replacement guide from VMware.

3. Here are the database migration steps from VMware; the same steps are in guide number 1.

4. An excellent article by Richard Harris on the same process. A must-read; I learned from his experience too.
